January 15, 2025

sending pixels over the wire

Previously, I realized I could let Rack handle texture rendering, since Rack is already rendering textures. This is what we in the industry call an “omg duh wtf why didn’t i realize” moment. What this means is that I might be able to get away with not requiring a custom version of Rack. If I continue to extrapolate from there, I can see that there would no longer be a need to manage that custom Rack build for the user. That means that the VR front end and Rack don’t necessarily need to be running on the same machine. And if that were the case, they wouldn’t have a shared filesystem to use to pass texture data from Rack to Unreal. What would that look like?

There are still a couple of potential roadblocks to ditching the custom Rack build, but I’m curious now and I want to take a little detour and try to send the pixels over the network. Luckily this is just a silly side project and I’m the boss here, so that’s exactly what I’ll do.

I want to stick with OSC to send the data for now, since that’s what I’ve been using up to now for all the other communications and it seems like a fun challenge. OSC has a data type called blob, which is just an array of unsigned 8-bit integers. We’re working with a theoretical maximum UDP datagram size of around 64 kilobytes, but because of the reality of networking technology, the actual maximum size if we’re sending over a network and want to avoid fragmentation is more like 1500 bytes (the usual Ethernet MTU), or, more pessimistically, 1232 bytes of payload. That lower figure assumes IPv6: its minimum MTU of 1280 bytes, minus 40 bytes of IPv6 header and 8 bytes of UDP header. Right now, a rendered panel is an array of uncompressed RGBA pixel data, always exactly 4 bytes per pixel. A small panel is around 1 megabyte of data. So I’ll have to break that data up into chunks in order to send it in a bunch of packets.
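Here’s a rough sketch of that chunking step, in Python for brevity rather than the actual plugin code. The 1024-byte chunk size and the 512 x 512 panel are made-up numbers for illustration; the only real constraint is that each chunk plus its OSC overhead stays under the 1232-byte budget.

```python
BYTES_PER_PIXEL = 4   # uncompressed RGBA, as described above
CHUNK_SIZE = 1024     # hypothetical: leaves headroom under 1232 bytes for OSC overhead


def chunk_pixels(pixels: bytes, chunk_size: int = CHUNK_SIZE) -> list:
    """Split raw pixel data into consecutive chunks; the last one may be shorter."""
    return [pixels[i:i + chunk_size] for i in range(0, len(pixels), chunk_size)]


# A made-up 512 x 512 panel, purely for illustration.
width, height = 512, 512
pixels = bytes(width * height * BYTES_PER_PIXEL)  # 1,048,576 zero bytes
chunks = chunk_pixels(pixels)                     # 1024 chunks of 1024 bytes each
```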

Because I’m sending OSC over UDP, it is connectionless. Fire and forget. Send your data into the ether and hope for the best. There’s nothing about the protocol that confirms the packets arrived at all. There’s no guarantee that they’ll arrive in the same order in which they were sent. But we need to rebuild the image on the other side. We’ll need to know the order, and we’ll need every chunk.
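To make those two requirements concrete, here’s a toy simulation in Python. Nothing in it is real networking: `unreliable_channel` is a hypothetical stand-in that drops and shuffles packets the way UDP is allowed to, and each packet is just a `(sequence number, data)` tuple. With a sequence number attached, the receiver can restore the order and can tell which chunks never showed up.

```python
import random


def unreliable_channel(packets, drop_rate, rng):
    """Simulate UDP-like delivery: some packets vanish, the rest arrive shuffled."""
    delivered = [p for p in packets if rng.random() >= drop_rate]
    rng.shuffle(delivered)
    return delivered


def find_missing(received, total):
    """Return the sequence numbers that never arrived."""
    seen = {seq for seq, _ in received}
    return [seq for seq in range(total) if seq not in seen]


def reassemble(received, total):
    """Rebuild the original bytes, refusing if any chunk is missing."""
    missing = find_missing(received, total)
    if missing:
        raise ValueError("missing chunks: %s" % missing)
    by_seq = dict(received)  # duplicate packets collapse onto the same key
    return b"".join(by_seq[seq] for seq in range(total))
```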

So that’s the problem defined.

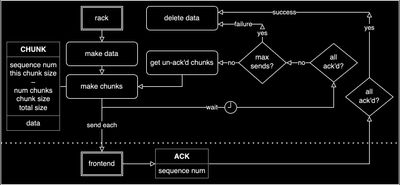

I wanted to see what I could come up with on my own before looking into other folks’ recommendations, and I’m proud that I came up with something that pretty closely matches some of the recommendations I found afterward. Here’s what my initial solution looks like:

We chunk up the data, sending each chunk over to the front end. Each packet contains its chunk of data, plus all of the metadata necessary to reconstruct the data. Upon receipt, the front end sends back an ACK (acknowledge) message with the sequence number of the chunk it just received. When Rack receives an ACK, it checks to see whether all chunks have been received, and proceeds to the success state if so. After the initial send, Rack also sets up a delayed action to check for un-ACK’d chunks and resend them. If any chunk has been sent more than a configurable number of times, it is considered failed and the entire process proceeds to the failed state. In the diagram, a failure and a success are treated the same way: dispose of the data and move on. In reality, we might want to retry from the beginning, notify the user, and/or tell the front end to discard the partial data.
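The sender side of that flow could be sketched like this. It’s Python rather than the real Rack code, and every name in it is hypothetical: `send` stands in for whatever actually puts an OSC message on the wire, `max_attempts` is the “configurable number of times”, and `on_resend_timer` is what the delayed action would call.

```python
class ChunkSender:
    """Tracks which chunks are still un-ACK'd and how often each has been sent."""

    def __init__(self, chunks, send, max_attempts=3):
        self.chunks = chunks
        self.send = send                  # hypothetical hook: send(seq, total, data)
        self.max_attempts = max_attempts  # hypothetical resend limit per chunk
        self.attempts = [0] * len(chunks)
        self.unacked = set(range(len(chunks)))
        self.state = "sending"            # "sending", "success", or "failed"

    def _send(self, seq):
        self.attempts[seq] += 1
        # Each packet carries its sequence number and the total chunk count.
        self.send(seq, len(self.chunks), self.chunks[seq])

    def start(self):
        """Initial send of every chunk."""
        for seq in range(len(self.chunks)):
            self._send(seq)
        if not self.unacked:              # nothing to send at all
            self.state = "success"

    def on_ack(self, seq):
        """The front end confirmed receipt of chunk `seq`."""
        self.unacked.discard(seq)
        if not self.unacked and self.state == "sending":
            self.state = "success"

    def on_resend_timer(self):
        """Delayed action: resend un-ACK'd chunks, or give up on the transfer."""
        if self.state != "sending":
            return
        for seq in sorted(self.unacked):
            if self.attempts[seq] >= self.max_attempts:
                self.state = "failed"
                return
            self._send(seq)
```

In this sketch a chunk is sent at most `max_attempts` times before the whole transfer is marked failed. What happens after that (retrying, notifying the user, telling the front end to discard partial data) is left out.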

Initially, we send over the dimensions of the model. That’s the shape change you see at the beginning of the video. From there on out, the chunks are transferring. This particular texture is 820,800 bytes (about 802k, a little under a megabyte), and sends in 744 chunks. It’s pretty slow! 11.61 seconds. I’m sure there is some optimization to be done for some minor gains there, but next time we’ll take a look at compressing that data to see if we can’t improve the performance more significantly.
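For a sense of scale, here’s some back-of-the-envelope arithmetic on those numbers (Python used as a calculator). These are averages derived only from the three figures above, not separate measurements: roughly 1,103 bytes per chunk, about 70 kilobytes per second, and around 15.6 milliseconds per chunk.

```python
total_bytes = 820_800   # size of this texture
chunk_count = 744       # chunks it was sent in
elapsed_s = 11.61       # total transfer time in seconds

bytes_per_chunk = total_bytes / chunk_count    # average payload per chunk
throughput = total_bytes / elapsed_s           # average bytes per second
ms_per_chunk = elapsed_s / chunk_count * 1000  # average milliseconds per chunk

print(round(bytes_per_chunk), round(throughput), round(ms_per_chunk, 1))
```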